Cloud computing is a metaphorical term for hosted services on the Internet. These can be infrastructure (i.e., raw equipment), platforms (e.g., operating systems, basic software like databases or web servers), or software (e.g., content management systems, social networking software). Typically, it is sold on a metered basis, the way a utility charges for water.
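To make the utility comparison concrete, here is a minimal sketch of a metered bill. The two rates and the `monthly_bill` function are invented for illustration and are not any provider's real prices; the point is only that the charge scales with what you use.

```python
# Hypothetical prices, invented for illustration; not any provider's real rates.
RATE_PER_SERVER_HOUR = 0.10     # dollars per hour a server is running
RATE_PER_GB_TRANSFERRED = 0.12  # dollars per gigabyte sent over the network

def monthly_bill(server_hours, gb_transferred):
    """Return the metered charge in dollars: usage multiplied by unit price."""
    total = (server_hours * RATE_PER_SERVER_HOUR
             + gb_transferred * RATE_PER_GB_TRANSFERRED)
    return round(total, 2)

# One server running for a 30-day month (720 hours), sending 50 GB of data.
print(monthly_bill(720, 50))
```

If usage doubles, the bill doubles; if the server is switched off, the compute charge drops to zero, just as a water bill does when the tap is closed.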

Serious cloud computing is done in massive data centers, measured in multiples of football fields. The centers have redundant connections to the Internet, power fed directly from one or more local utilities, diesel generators, vast banks of batteries, and huge cooling systems. Centers house tens or hundreds of thousands of servers. Google has an estimated 900k servers. The servers are small computers (think of a stripped-down motherboard from a laptop computer, plus a hard drive) stacked into towers. See videos below.

The latest trends involve slashing power usage and containerizing: packing thousands of servers into shipping containers, which are plugged directly into the power, network, and cooling systems in a warehouse.

The scale is mind-boggling, so relatively few companies set these up. Recently, a few of them have released videos. You can skip through these videos and tune out the jargon.

Microsoft

New tour released this week.

A tour from 2009 of Google’s first container-based data center

And here’s a video focused on security:

From a tour 3 months ago:

And a more promotional video:

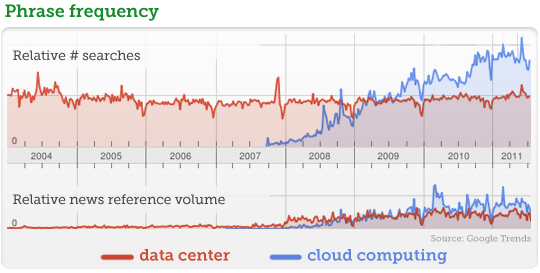

Explosion since 2007

The term ‘cloud computing’ traces back to at least the 1980s, and has exploded in popularity since 2007:

Organizations have been outsourcing the complex details of hosting many servers in data centers for years. Since the early days of the web, there have been “content delivery networks” like Cambridge, MA-based Akamai, which specialize in placing warehouses of servers throughout the world and then routing web surfers to the nearest one. This was a workaround for the problem of a slow internet. Even with light-speed fiber optics, many factors slow down transmission, and reducing the number of steps between a web user and the server usually translates into speed. For example, The New York Times outsources hosting of its images to Akamai. In fact, although Akamai is not a household name, at any given time 15-30% of the world’s web traffic is carried by its network of cloud facilities. Akamai has nearly 100k servers deployed in 72 countries, spanning most of the networks that make up the internet. Other major players include Google, Microsoft, and Amazon.com.
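The idea of sending each visitor to a nearby server can be sketched in a few lines. This is a simplification under stated assumptions: the three locations in `EDGE_SERVERS` are made up, and straight-line distance stands in for "nearest". Real content delivery networks decide based on network measurements rather than geography alone, and nothing here reflects how Akamai actually does it.

```python
import math

# Hypothetical edge locations (name -> latitude, longitude); not real CDN sites.
EDGE_SERVERS = {
    "boston": (42.36, -71.06),
    "london": (51.51, -0.13),
    "tokyo": (35.68, 139.69),
}

def great_circle_km(a, b):
    """Approximate distance in km between two (lat, lon) points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))

def nearest_edge(user_location):
    """Return the name of the edge server geographically closest to the user."""
    return min(
        EDGE_SERVERS,
        key=lambda name: great_circle_km(user_location, EDGE_SERVERS[name]),
    )

# A visitor in Paris is routed to the London location rather than Boston or Tokyo.
print(nearest_edge((48.85, 2.35)))
```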

Many organizations host their web sites on “dedicated servers” or “virtual private servers,” which are managed in remote data centers. For example, the New York Times uses dedicated servers at NTT America, a subsidiary of NTT (Nippon Telegraph and Telephone Corporation). NTT is one of a dozen “Tier 1” providers. (IDEA also hosts our web sites on dedicated servers at NTT.)

What’s new is the proliferation of platforms and software, such as hosting all of an organization’s email, or museum collection, or customer database, in the cloud. Often this is abstracted so the data is actually stored on multiple servers, which is the key to scaling to large numbers of users. My prior post on building social networks touches on this problem: if you run your own software, or use software designed to run on just one server, you limit the number of concurrent users. Setting up software to spread load across multiple servers is a hard problem, which is partially solved by many of today’s cloud computing providers.
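One common way to spread data across several servers is to hash each record's key and use the result to pick a server. The sketch below is illustrative only: the server names and the `server_for` helper are hypothetical, and it is not a description of how any particular cloud provider works.

```python
import hashlib

# Hypothetical server names, for illustration only.
SERVERS = ["server-a", "server-b", "server-c"]

def server_for(key, servers=SERVERS):
    """Map a key (e.g. a user ID) to one server using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return servers[int(digest, 16) % len(servers)]

# Spread 9,000 made-up user IDs across the servers and count each one's share.
counts = {name: 0 for name in SERVERS}
for i in range(9000):
    counts[server_for(f"user-{i}")] += 1
print(counts)
```

Each server ends up with roughly a third of the users, and the same user always lands on the same server. Part of what makes the problem hard is visible even here: with this simple modulo scheme, adding a fourth server changes the result for most keys, so most of the data would have to move.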

Update: 1-Aug-2011: Added mention of Google’s estimated 900k servers.